
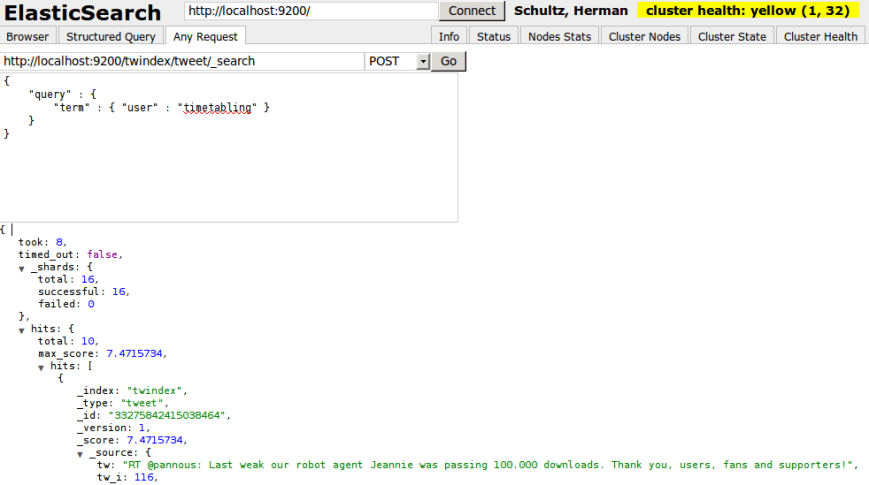

This article shows the most basic steps required to get ElasticSearch working for the simplest scenario with the help of the Java API: installing, indexing and querying.

1. Installation

Either get the sources from GitHub and compile them, or grab the zip file of the latest release and start a node in the foreground via:

bin/elasticsearch -f

To make things easy for you, I have prepared a small example with sources derived from jetwick where you can start ElasticSearch directly from your IDE, e.g. just click ‘open projects’ in NetBeans and then run the ElasticNode class. The example should show you how to do indexing via the bulk API, querying, faceting, filtering, sorting and probably some more:

To get started on your own see the sources of the example where I’m actually using ElasticSearch or take a look at the shortest ES example (with Java API) in the last section of this post.

Info: If you want ES to start automatically when your Debian system boots, read this documentation.

2. Indexing and Querying

First of all, define every field of your document that should not get the default analysis (by default, strings get analyzed, etc.) and specify it in tweet.json under the folder es/config/mappings/_default

For example, in the elasticsearch example the userName shouldn’t be analyzed:

    { "tweet" : {
        "properties" : {
            "userName" : { "type" : "string", "index" : "not_analyzed" }
        }
    }}

Then start the node:

    import static org.elasticsearch.node.NodeBuilder.*;
    ...
    Builder settings = ImmutableSettings.settingsBuilder();
    // here you can set the node and index settings via API
    settings.build();
    NodeBuilder nBuilder = nodeBuilder().settings(settings);
    if (testing)
        nBuilder.local(true);

    // start it!
    node = nBuilder.build().start();

You can get the client directly from the node:

Client client = node.client();

or if you need the client in another JVM you can use the TransportClient:

    Settings s = ImmutableSettings.settingsBuilder().put("cluster.name", cluster).build();
    TransportClient tmp = new TransportClient(s);
    // the transport client connects to the transport port (9300 by default), not the HTTP port 9200
    tmp.addTransportAddress(new InetSocketTransportAddress("127.0.0.1", 9300));
    client = tmp;

Now create your index:

    try {
        client.admin().indices().create(new CreateIndexRequest(indexName)).actionGet();
    } catch(Exception ex) {
        logger.warn("already exists", ex);
    }

When indexing your documents you’ll need to know where to store (indexName) and what to store (indexType and id):

    IndexRequestBuilder irb = client.prepareIndex(getIndexName(), getIndexType(), id).
            setSource(b);
    irb.execute().actionGet();

where the source b is the jsonBuilder created from your domain object:

    import static org.elasticsearch.common.xcontent.XContentFactory.*;
    ...
    XContentBuilder b = jsonBuilder().startObject();
    b.field("tweetText", u.getText());
    b.field("fromUserId", u.getFromUserId());
    if (u.getCreatedAt() != null) // the 'if' is not necessary in >= 0.15
        b.field("createdAt", u.getCreatedAt());
    b.field("userName", u.getUserName());
    b.endObject();

To get a document via its id you do:

    GetResponse rsp = client.prepareGet(getIndexName(), getIndexType(), "" + id).
            execute().actionGet();
    MyTweet tweet = readDoc(rsp.getSource(), rsp.getId());

Getting multiple documents at once is currently not supported via ‘prepareGet’, but you can create a terms query on the internal field ‘_id’ to retrieve them in bulk. For updating a lot of documents there is already a bulk API.
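The terms-query workaround is easiest to picture through the JSON request body it amounts to. The following is only a minimal sketch in plain Java that assembles such a body. The class and method names are hypothetical, the JSON shape is my assumption of the usual query DSL form, and the Java builder call that would produce it depends on your ES version.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical helper, not part of the ElasticSearch API.
class IdsQuerySketch {

    // Assembles {"query":{"terms":{"_id":["id1","id2",...]}}} for the given ids.
    static String termsOnId(List<String> ids) {
        StringBuilder sb = new StringBuilder("{\"query\":{\"terms\":{\"_id\":[");
        for (int i = 0; i < ids.size(); i++) {
            if (i > 0) {
                sb.append(',');
            }
            // escape backslashes and double quotes so the JSON stays valid
            String escaped = ids.get(i).replace("\\", "\\\\").replace("\"", "\\\"");
            sb.append('"').append(escaped).append('"');
        }
        return sb.append("]}}}").toString();
    }

    public static void main(String[] args) {
        System.out.println(termsOnId(Arrays.asList("1", "2", "3")));
    }
}
```

Sending one such query instead of many single gets saves round trips, at the price of going through the search path rather than the real-time get.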

In test cases, after indexing you’ll have to make sure that the documents are actually ‘committed’, i.e. visible to search, before searching (don’t do this in production):

    RefreshResponse rsp = client.admin().indices().refresh(new RefreshRequest(indices)).actionGet();

To write tests which use ES, you can take a look at the source code to see how I’m doing this (starting ES on beforeClass etc).

Now let us search:

    SearchRequestBuilder builder = client.prepareSearch(getIndexName());
    XContentQueryBuilder qb = QueryBuilders.queryString(queryString).defaultOperator(Operator.AND).
            field("tweetText").field("userName", 0).
            allowLeadingWildcard(false).useDisMax(true);
    builder.addSort("createdAt", SortOrder.DESC);
    builder.setFrom(page * hitsPerPage).setSize(hitsPerPage);
    builder.setQuery(qb);

    SearchResponse rsp = builder.execute().actionGet();
    SearchHit[] docs = rsp.getHits().getHits();
    for (SearchHit sd : docs) {
        // to get the explanation you'll need to enable it when querying:
        // System.out.println(sd.getExplanation().toString());

        // if we use in mapping: "_source" : {"enabled" : false}
        // we need to include all necessary fields in the query and then use sd.getFields()
        // instead of sd.getSource()
        MyTweet tw = readDoc(sd.getSource(), sd.getId());
        tweets.add(tw);
    }
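The paging in the search above relies on a zero-based page number: the offset passed to setFrom is simply page * hitsPerPage. As a small sketch in plain Java (the class and method names are hypothetical and not part of the example project), the offset and the number of pages for a given total hit count could be computed like this:

```java
// Hypothetical paging helper, independent of the ElasticSearch API.
class PagingSketch {

    // Offset of the first hit on a zero-based page, i.e. the value handed to setFrom(...).
    static int from(int page, int hitsPerPage) {
        if (page < 0 || hitsPerPage <= 0) {
            throw new IllegalArgumentException("page must be >= 0 and hitsPerPage > 0");
        }
        return page * hitsPerPage;
    }

    // Number of pages needed to show totalHits hits (rounds up).
    static long pageCount(long totalHits, int hitsPerPage) {
        if (totalHits < 0 || hitsPerPage <= 0) {
            throw new IllegalArgumentException("totalHits must be >= 0 and hitsPerPage > 0");
        }
        return (totalHits + hitsPerPage - 1) / hitsPerPage;
    }

    public static void main(String[] args) {
        System.out.println("offset of page 2: " + from(2, 15));
        System.out.println("pages for 31 hits: " + pageCount(31, 10));
    }
}
```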

The helper method readDoc is simple:

    public MyTweet readDoc(Map source, String idAsStr) {
        String name = (String) source.get("userName");
        long id = -1;
        try {
            id = Long.parseLong(idAsStr);
        } catch (Exception ex) {
            logger.error("Couldn't parse id:" + idAsStr);
        }

        MyTweet tweet = new MyTweet(id, name);
        tweet.setText((String) source.get("tweetText"));
        tweet.setCreatedAt(Helper.toDateNoNPE((String) source.get("createdAt")));
        tweet.setFromUserId((Integer) source.get("fromUserId"));
        return tweet;
    }
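One caveat with readDoc: the direct cast to Integer fails if the field is missing (null) or if the JSON parser hands the number back as a Long. A more defensive way to read numeric fields from the source map could look like the following sketch. It is plain Java, and the class and method names are hypothetical, not taken from the example project.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical helper for reading numbers from a parsed JSON source map.
class SourceFieldsSketch {

    // Returns the field as long, accepting any Number or a numeric String,
    // and falls back to the given default if the field is missing or unparsable.
    static long toLong(Map<String, Object> source, String field, long fallback) {
        Object value = source.get(field);
        if (value instanceof Number) {
            return ((Number) value).longValue();
        }
        if (value instanceof String) {
            try {
                return Long.parseLong(((String) value).trim());
            } catch (NumberFormatException ex) {
                return fallback;
            }
        }
        return fallback;
    }

    public static void main(String[] args) {
        Map<String, Object> source = new HashMap<String, Object>();
        source.put("fromUserId", 42);
        System.out.println(toLong(source, "fromUserId", -1L));
        System.out.println(toLong(source, "missing", -1L));
    }
}
```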

If you want facets to be returned along with the search results, you have to ‘enable’ them when querying:

    String facetName = "userName";
    String facetField = "userName";
    builder.addFacet(FacetBuilders.termsFacet(facetName)
            .field(facetField));

Then you can retrieve all terms facets via:

    SearchResponse rsp = ...
    if (rsp != null) {
        Facets facets = rsp.facets();
        if (facets != null)
            for (Facet facet : facets.facets()) {
                if (facet instanceof TermsFacet) {
                    TermsFacet ff = (TermsFacet) facet;
                    // => ff.getEntries() => count per unique value
                    ...
                }
            }
    }

This is done in the FacetPanel.
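To make ‘count per unique value’ concrete: for a not-analyzed field like userName, a terms facet boils down to counting how often each value occurs among the matching documents and returning the most frequent ones. The sketch below imitates that on an in-memory list. It is an illustration in plain Java with hypothetical names, not how ElasticSearch computes facets internally.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Illustration only: mimics the result shape of a terms facet on an in-memory list.
class TermsFacetSketch {

    // Counts each distinct value and returns the 'size' most frequent ones,
    // ordered by count descending, ties broken alphabetically.
    static List<Map.Entry<String, Integer>> countTerms(List<String> values, int size) {
        Map<String, Integer> counts = new HashMap<String, Integer>();
        for (String value : values) {
            counts.merge(value, 1, Integer::sum);
        }
        List<Map.Entry<String, Integer>> entries =
                new ArrayList<Map.Entry<String, Integer>>(counts.entrySet());
        entries.sort((a, b) -> {
            int byCount = b.getValue().compareTo(a.getValue());
            return byCount != 0 ? byCount : a.getKey().compareTo(b.getKey());
        });
        return new ArrayList<Map.Entry<String, Integer>>(
                entries.subList(0, Math.min(size, entries.size())));
    }

    public static void main(String[] args) {
        List<String> userNames = Arrays.asList("peter", "anna", "peter", "bob", "anna", "peter");
        for (Map.Entry<String, Integer> entry : countTerms(userNames, 2)) {
            System.out.println(entry.getKey() + " -> " + entry.getValue());
        }
    }
}
```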

I hope you now have a basic understanding of ElasticSearch. Please let me know if you find a bug in the example or if something is not clearly explained!

In my (too?) small Solr vs. ElasticSearch comparison I also listed some useful tools for ES. Also have a look at this!

3. Some hints

- Use the ‘none’ gateway for tests. The gateway is used for long-term persistence.

- The Java API is not well documented at the moment, but there are now several Java API usages in the Jetwick code.

- Use scripting for boosting, with JavaScript as the language: it was the most performant as of Dec 2010!

- Restart the node to try a new scripting language.

- Use the snowball stemmer; in 0.15 use language:English (otherwise you get a ClassNotFoundException).

- See how your terms get analyzed:

http://localhost:9200/twindexreal/_analyze?analyzer=index_analyzer “this is a #java test => #java + test”

- Or include the analyzer as a plugin: put the jar under lib/ (e.g. see the icu plugin). Be sure you are using the right Guice annotation.

- Port 9200 (range 9200-9300) is used for HTTP communication and port 9300 (range 9300-9400) for the transport client.

- If you have problems with ports, make sure that at least a simple put + get works via curl.

- Scaling-ElasticSearch

This solution is my preferred solution for handling long term persistency of a cluster since it means that node storage is completely temporal. This in turn means that you can store the index in memory for example, get the performance benefits that come with it, without sacrificing long term persistency.

- Too many open files: edit /etc/security/limits.conf

      user soft nofile 15000
      user hard nofile 15000

  Then log out and log in again.
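For the port hints above, a quick way to see whether anything is listening on the HTTP or transport port is a plain TCP connect. The sketch below uses only the JDK; the class and method names are hypothetical and the ports are the defaults mentioned in the hints. It only tells you that some process accepts connections there, not that it is ElasticSearch.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Hypothetical helper: checks whether a TCP port accepts connections.
class PortCheckSketch {

    // Returns true if a TCP connection to host:port succeeds within the timeout.
    static boolean isOpen(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException ex) {
            return false;
        }
    }

    public static void main(String[] args) {
        System.out.println("http port 9200 open: " + isOpen("127.0.0.1", 9200, 500));
        System.out.println("transport port 9300 open: " + isOpen("127.0.0.1", 9300, 500));
    }
}
```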

4. Simplest Java Example

    import static org.elasticsearch.node.NodeBuilder.*;
    import static org.elasticsearch.common.xcontent.XContentFactory.*;
    ...
    Node node = nodeBuilder().local(true).
            settings(ImmutableSettings.settingsBuilder().
                    put("index.number_of_shards", 4).
                    put("index.number_of_replicas", 1).
                    build()).build().start();

    String indexName = "tweetindex";
    String indexType = "tweet";
    String fileAsString = "{"
            + "\"tweet\" : {"
            + " \"properties\" : {"
            + " \"longval\" : { \"type\" : \"long\", \"null_value\" : -1}"
            + "}}}";

    Client client = node.client();
    // create index
    client.admin().indices().
            create(new CreateIndexRequest(indexName).mapping(indexType, fileAsString)).
            actionGet();
    client.admin().cluster().health(new ClusterHealthRequest(indexName).waitForYellowStatus()).actionGet();

    // jsonBuilder() comes from the static import of XContentFactory above
    XContentBuilder docBuilder = jsonBuilder().startObject();
    docBuilder.field("longval", 124L);
    docBuilder.endObject();

    // feed previously created doc
    IndexRequestBuilder irb = client.prepareIndex(indexName, indexType, "1").
            setConsistencyLevel(WriteConsistencyLevel.DEFAULT).
            setSource(docBuilder);
    irb.execute().actionGet();

    // there is also a bulk API if you have many documents

    // make the doc available for sure; you shouldn't need this in production, because
    // documents become available automatically in (near) real time
    client.admin().indices().refresh(new RefreshRequest(indexName)).actionGet();

    // create a query to get this document
    XContentQueryBuilder qb = QueryBuilders.matchAllQuery();
    TermFilterBuilder fb = FilterBuilders.termFilter("longval", 124L);
    SearchRequestBuilder srb = client.prepareSearch(indexName).
            setQuery(QueryBuilders.filteredQuery(qb, fb));

    SearchResponse response = srb.execute().actionGet();
    System.out.println("failed shards:" + response.getFailedShards());
    Object num = response.getHits().hits()[0].getSource().get("longval");
    System.out.println("longval:" + num);