I like both technologies, Solr and ElasticSearch, and a lot of work is going into both. So let me explain why I chose to migrate from Solr to ElasticSearch (ES).

What is elastic?

- ES lets you add and remove nodes [Video] and the requests will be handled by the correct node. Nodes will even do ‘zero config’ discovery.

To scale when the load increases you can use replicas. ElasticSearch will automatically play the load balancer and choose the appropriate node.

- ES lets you scale when the amount of data increases, because then you can easily use sharding: it’s just a number in ES (set either via the API or via configuration).

With these features ES is well prepared for the century of the cloud [Blog]!
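To make the "it’s just a number" point concrete, here is a minimal sketch of the settings body you could send when creating an index. The index name `tweets` and the helper `index_settings` are made up for illustration; `number_of_shards` and `number_of_replicas` are the index settings ES documents for this purpose.

```python
import json


def index_settings(shards, replicas):
    """Build the JSON body for creating an index with the given shard and replica counts."""
    if shards < 1:
        raise ValueError("an index needs at least one shard")
    if replicas < 0:
        raise ValueError("replica count cannot be negative")
    return {
        "settings": {
            "index": {
                "number_of_shards": shards,
                "number_of_replicas": replicas,
            }
        }
    }


# Hypothetical index name "tweets": this body would be sent with PUT /tweets
body = json.dumps(index_settings(shards=5, replicas=1), indent=2)
print(body)
```

Nothing is sent over the network here; the sketch only builds the body, which you could then pass to curl or any HTTP client.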

What’s the difference to Solr?

Solr wasn’t designed from the ground up with the ‘cloud’ in mind, but of course you can do sharding, use replication and use multiple cores with Solr. It’s just a bit more complicated.

When using Solr Cloud and ZooKeeper this gets better. You’ll also need to invest some time to make Solr near real time to be comparable with ES. This all seemed a bit too tricky to me (in Dec 2010), and I don’t have time for administration work in my free time, e.g. to set up backups/replicas, add shards/indices, …

Other Options?

What are my other options? There is Xapian, Sphinx etc. But only the following two options fulfilled my requirements:

- Using Solandra or

- Moving from Solr to ElasticSearch

I wanted a Lucene-based solution where sharding and creating indices work out of the box. I simply wanted more data from Twitter available in Jetwick.

The first option is very nice: no changes to your code are required, only a minor change in your solrconfig.xml, and you will get a distributed and real-time Solr! So I tried Solandra, and after a lot of support from Jake (Thanks!) I got it running with Jetwick! But in the end I still had performance issues with my indexing strategy, so I tried the second option in parallel.

What are the advantages of ElasticSearch?

To be honest, Jetwick doesn’t really need to be elastic. I’m only using the sharding feature at the moment, as I don’t own capacity on a cloud. BUT ElasticSearch is also elastic in a different area: ES lets you manage indices very easily! A clever thing in ES is that you don’t define the document structure per index like you do in Solr. Instead, you define types and then create documents of a specific type in a specific index. And documents in ES don’t need to be flat: they can be nested, as they are pure JSON.
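As a small illustration of a nested document with a type, here is a sketch using only the Python standard library. The index name `twitter`, the type name `tweet`, the field names and the helper `document_path` are all made up for this example. The `/index/type/id` path reflects the ES of the time of this post; later ElasticSearch releases dropped types.

```python
import json

# A nested document: the "user" object and the "tags" list live inside the tweet.
tweet = {
    "text": "Moving from Solr to ElasticSearch",
    "user": {"name": "example_user", "followers": 42},
    "tags": ["solr", "elasticsearch"],
}


def document_path(index, doc_type, doc_id):
    """Return the REST path for a document of a given type in a given index."""
    return "/{}/{}/{}".format(index, doc_type, doc_id)


path = document_path("twitter", "tweet", "1")
payload = json.dumps(tweet)
print("PUT", path)
print(payload)
```

In Solr the same data would have to be flattened into fields such as `user_name` that are declared up front in the schema.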

That and the ‘elasticity’ could make ES suitable as a hip NoSQL storage 😉

Another advantage over Solr is the near real time behaviour, which you’ll get at no cost when switching to ES.

The Move!

Moving to ElasticSearch with Jetwick wasn’t as easy as I had hoped, although I’m sure one can do a normal migration in one day with the experience I have now ;). It took a lot of time to understand the new technology and, more importantly, to migrate my UI code, where I relied too heavily on constructing a SolrQuery object. In the end I created a custom Solr2ElasticHelper utility to avoid this clumsy work at the beginning. My plan was to fully migrate this code some day, and it has since been migrated to my own query object, which makes it easy for me to add and remove filters etc.

When moving to ElasticSearch, make sure that it supports all the Solr features you need. Although Shay works really hard to integrate new features into ES, he cannot do all the work alone! E.g. I had to integrate Solr’s WordDelimiterFilter, but this wasn’t that difficult: just copy & paste, plus some configuration.
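To give an idea of what such a query object can look like, here is a rough sketch. It is not the actual Jetwick code: the class `SearchQuery`, its methods and the field names are made up for illustration, and the `bool`/`filter` layout of the generated JSON is the one documented for later ElasticSearch releases, so treat it as an assumption.

```python
class SearchQuery:
    """Hypothetical query object: a free-text query plus named term filters."""

    def __init__(self, text):
        self.text = text
        self.filters = {}

    def add_filter(self, field, value):
        """Add (or overwrite) a term filter on the given field."""
        self.filters[field] = value
        return self

    def remove_filter(self, field):
        """Remove the filter on the given field if there is one."""
        self.filters.pop(field, None)
        return self

    def to_dict(self):
        """Render the query as a search request body."""
        bool_query = {"must": [{"query_string": {"query": self.text}}]}
        if self.filters:
            # Sort by field name so the output is stable.
            bool_query["filter"] = [
                {"term": {field: value}}
                for field, value in sorted(self.filters.items())
            ]
        return {"query": {"bool": bool_query}}


query = SearchQuery("java").add_filter("lang", "en").add_filter("user", "alice")
query.remove_filter("user")
print(query.to_dict())
```

The UI code then only talks to `add_filter` and `remove_filter`, and the search-engine-specific JSON is produced in one place.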

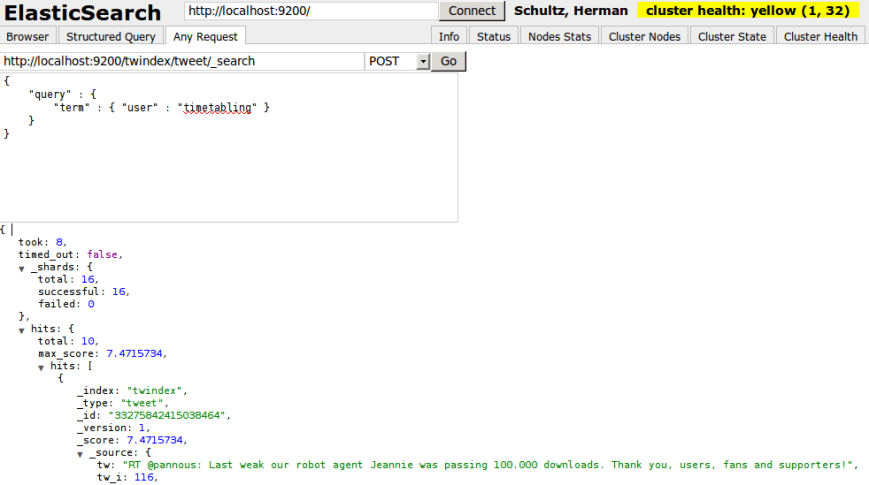

ES uses Netty under the hood, so no other web server is necessary. Just start the node either via the API or directly via bin/elasticsearch and then query the node via curl or the browser. For example, you can use the nice ElasticSearch Head project or ElasticSearch-JS, which are equivalents to the Solr admin page.

To add a node, simply start another ES instance and the nodes will automagically discover each other. You can also use curl on the command line to query and feed the index as documented in the REST API documentation.
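If you prefer a script over curl, the same feed and query requests can be sketched with the Python standard library. This assumes a node on `localhost` with the default HTTP port 9200; the index `twitter`, the type `tweet` and the helper names are made up for illustration. The requests are only built, not sent.

```python
import json
import urllib.parse
import urllib.request

BASE_URL = "http://localhost:9200"  # assumed: local node on the default HTTP port


def index_request(index, doc_type, doc_id, document):
    """Build a PUT request that feeds one JSON document into the index."""
    url = "{}/{}/{}/{}".format(BASE_URL, index, doc_type, doc_id)
    data = json.dumps(document).encode("utf-8")
    return urllib.request.Request(
        url, data=data, method="PUT",
        headers={"Content-Type": "application/json"},
    )


def search_request(index, query):
    """Build a GET request that runs a query-string search via the q parameter."""
    params = urllib.parse.urlencode({"q": query})
    url = "{}/{}/_search?{}".format(BASE_URL, index, params)
    return urllib.request.Request(url, method="GET")


feed = index_request("twitter", "tweet", "1", {"text": "hello elasticsearch"})
search = search_request("twitter", "text:hello")
print(feed.get_method(), feed.full_url)
print(search.get_method(), search.full_url)
# To actually send a request to a running node: urllib.request.urlopen(feed)
```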

No technology is perfect, so keep in mind the following disadvantages, which in my opinion will disappear over time:

- Solr has more Analyzers, Filters, etc., but it is relatively easy to use them in ES as well.

- Solr has a larger community, a larger user base and more companies offering professional support

- Solr has better documentation and more books. Regarding the docs of ES: they are now moving to the GitHub wiki and will improve IMO.

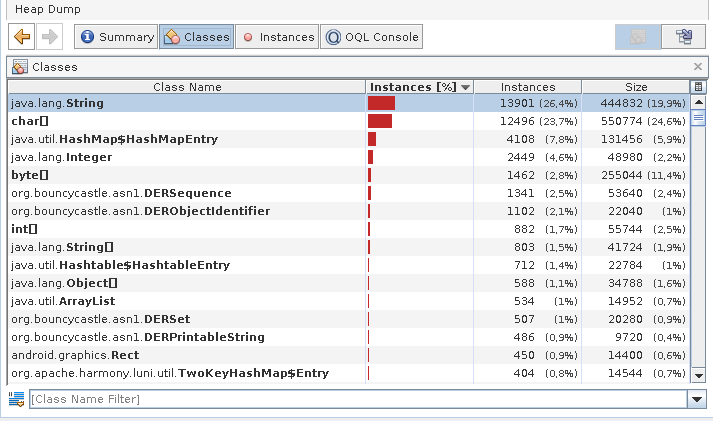

- Solr has more tooling, e.g. solrmonitor, LucidGaze and New Relic, but you still have YourKit and jvisualvm 😉

But also keep in mind the following points that I haven’t mentioned yet:

- Shay fixes bugs very quickly!

- ElasticSearch has a more recent Lucene version and releases more frequently

- It is very easy to contribute via GitHub (just a pull request away ;))

For an introduction to ElasticSearch you can read this article.