“Everybody (incl. me) is laughing at you JavaScript” – Me (before the year ~2003)

“It’s time to laugh back!” – JavaScript

Wouldn’t it be amazing to make all your programming tasks in one programming language? No need to switch? No need to relearn?

I dreamed that dream with Java. Sadly the Java-plugin is not the future (not user friendly, not search engine friendly, …) and ordinary web development can be done with pure Java solutions such as Vaadin/GWT or Wicket, but at some point you will need to know JavaScript.

With node.js – although this is not the first server side js solution – it is possible to do all your tasks in JavaScript! Plus a bit css and html knowledge, of course. But can we save files or querying databases with pure javascript? node.js has the goal to provide an implementation for such a server side API and designed its API to be non-blocking. Another interesting feature are web sockets: node.js makes it possible to directly communicate from server to client (and back) with pure JavaScript. So you can send js- or json-snippets back and forth. Check out one amazing example of this. Behind the scenes of node.js the V8 acts as virtual javascript machine and makes it all amazingly fast.

What has this all to do with full text search?

To better understand things I need to code them. Now, that I wanted to get a better understanding of an inverted index – I had to code it. But implementing it in Java would have been boring, because there already is the near-perfect Lucene. So I choose JavaScript: I wanted to get a better understand of this language and I wanted to try node.js.

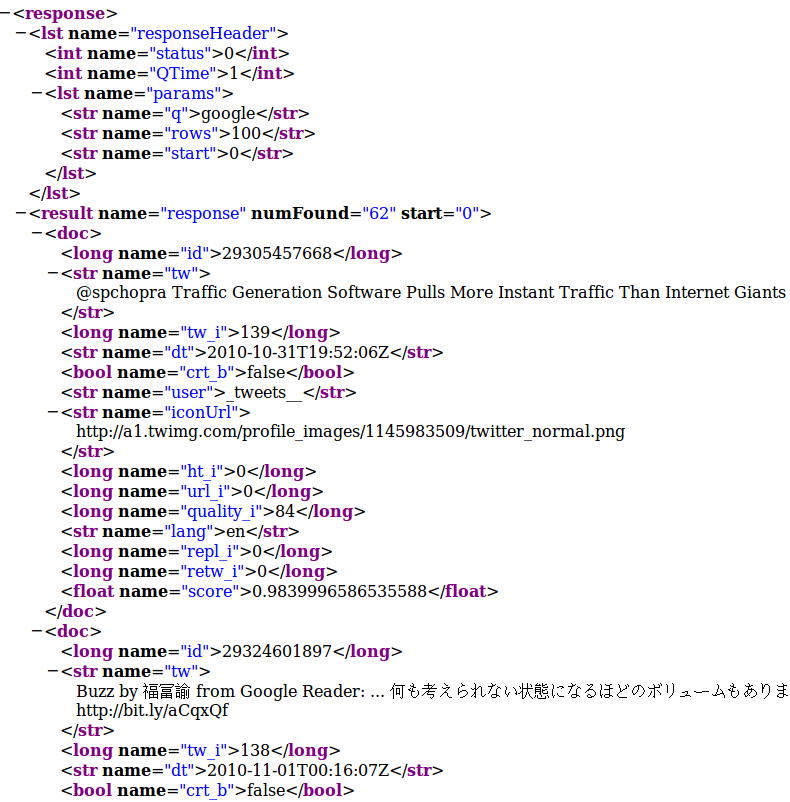

In my upcoming blog post I will write about JSii – an inverted index implementation in javascript (apache 2 licensed). The index is not limited to node.js – you can use it in the browser. But more interestingly it can be queried via http and even SolrJ – in that case node.js hosts the inverted index.

But why is a search engine in JavaScript useful? First of all, it is a prototype and I learned a lot. Second, you can check it out and learn something more and think about other possibilites/ideas.

Third, I can imagine of the following scenario – reducing the need of “server-side” architectures. You can call it “ad-hoc peer to peer networks over HTTP”. Imagine a user which visits your website is willing to give you for some minutes or seconds a bit of its browser-RAM and CPU. Then you can push some minor parts of your data (in our case a search index) to the users’ browser and include it into your network. This architecture is extremly difficult to manage. You will have to avoid that users think your site is compromised by malware. But this network will work better/faster/more reliable/… the more users visits your site!

A similar concept is used in electric power transmission. Centralized power stations produces the predictable ‘source of energy’. In the future decentralized power stations such as wind turbines, solar plants, etc will pop up for hours or even minutes and contribute its energy to the world wide energy network.

We will see what the future will bring for the IT sector. But there is no doubt: the future of JavaScript is bright.

This minor stunt is nothing comparable to Danny but hey, its great fun! If you want to see the king of trial click here. If you have something special to you which makes fun or is difficult (etc) please comment or add a video reply.

This minor stunt is nothing comparable to Danny but hey, its great fun! If you want to see the king of trial click here. If you have something special to you which makes fun or is difficult (etc) please comment or add a video reply.